Our coverage of the FAA’s Compliance Philosophy (April 2016, April 2017 and this issue) raises the question of how generally well-intentioned and experienced pilots fall out of compliance in the first place. A little research shows that falling to the dark side can be slow and insidious, with undesired side effects that are eventually capable of triggering an incident or accident.

Not only pilots are affected. Noncompliance can worm its way into company and organizational cultures as well, sometimes with even more disastrous outcomes. Let’s dig into this to see how we can avoid the slippery slope of even initially innocent noncompliance that often ends in serious incidents or accidents.

Intentional Noncompliance

Intentional noncompliance is a product of the “Don’t tell me” anti-authority attitude. With respect to compliance, the attitude is closer to regarding rules and regulations as silly, too complicated or unnecessary. There is also the Law of Primacy: Change can be difficult to accept. “I like the way we did it before.”

There are many influences that push pilots to intentional noncompliance. Maybe a published procedure doesn’t work, takes too long or competes with more important priorities. That might provoke skipping the procedure as a shortcut to save time. “I just don’t like it. I’ve got a better way.” “This is a bad idea” soon degenerates into “I’m not going to do it.” Pilots thinking this way have developed a noncompliance mindset.

Pilots who deliberately ignore the rules often fail to see the potential consequences. In the extreme, deliberate noncompliance can lead to recklessness and to significant but ignored risks.

GA and the airlines are too often guilty of failing to fly stabilized approaches. A stabilized approach means being properly configured, on speed and on centerline, and landing in the touchdown zone. Statistically, aside from takeoff, the greatest risk to any flight is during the last eight minutes.

One reason for unstable approaches is that there is no perceived penalty. It almost always works out fine. An airliner landed on its nosewheel at KLGA in 2013 after an approach in VMC that simply never got stable. The NTSB determined that the pilot should have aborted the landing. The nosewheel collapsed and the airplane was totaled. The captain, a ten-year veteran, was fired.

Failing to abort a landing is perhaps the most common form of deliberate noncompliance. The NTSB estimated in 2015 that 75 percent of all aviation accidents that it investigated contained cues that the aircraft should have gone around. Yet according to a Safety Summit in 2011, fully 97 percent of pilots continued landing despite unstable approaches.

Shudda Gone Around

In 1994, a Navajo Chieftain attempted a landing in Bridgeport, CT. It was night, the tower was closed and heavy fog allowed only a fraction of a mile of visibility. The aircraft struck a metal barrier at the end of the runway and burst into flame. Of nine people aboard, one survived. According to the NTSB, the aircraft was on a VFR flight plan, and the pilot did not fly the published ILS. The Navajo was fast and high crossing the runway threshold, failed to go around and landed with only 936 feet of runway remaining. The NTSB speculated that the pilot was under pressure to deliver passengers at the airport to avoid inconveniencing them and saddling the carrier with additional expenses.

The takeaway: Mission completion seems to be one of the leading motivators of intentional noncompliance.

Fallout from Noncompliance

Intentionally noncompliant pilots are up to three times more likely to commit unintentional errors or mismanage threats to flight safety. As the number of intentionally noncompliant events rises, the number of mismanaged threats and errors increases at a rate of about four unintentional errors per intentional noncompliance. Compliant flights typically generate just two unintended errors.

An intentionally noncompliant error triggers an incorrect response to the error about a fifth of the time. Intentionally noncompliant examples include checklists performed from memory or skipped, failure to execute a required missed approach and performing tasks designed for the after-landing taxi while the aircraft is still on the runway after landing.

It’s Not All Bad

Intentional noncompliance takes several forms, and not all of them are bad. Passive noncompliance occurs when a person simply ignores a directive. This can be dangerous because the individual is out of control. The same can be said of direct defiance. Then there is the simple (polite) refusal to comply: “Unable.” Saying no invokes your authority to decline something you consider unsafe or impractical. The last form, negotiation, as when you negotiate a better route with a controller, is positive since the controller and pilot come to a mutually satisfactory solution.

Unintentional Noncompliance

You get ramped by a Fed. To your red-faced embarrassment, you don’t have your certificates with you. They’re in your hangar on the other side of the airport. Retrieve them, show the Fed, let him or her remind you that they must be on or near you when flying, and the day is saved.

Distracted or inattentive, you flew into airspace without permission. Depending on whether safety of flight was affected or how the TRACON radar manager is feeling that day, you might wind up in Compliance Corner or escape with a scolding.

In a collective setting, the major defense against unintentional noncompliance is that pilots and controllers look out for one another. Individually, checklists, flow checks and a systematic and meticulous approach to flying can help you avoid unintended noncompliance. It’s worth noting that checklist errors occur most often before takeoff.

Normalization of Deviance

On January 28, 1986, the Space Shuttle Challenger broke apart 73 seconds after liftoff from Kennedy Space Center in Florida. The 24 previous shuttle launches were successful even though the O-ring seals in the joints between solid rocket booster segments were known to leak.

NASA knew this was wrong. Yet there seemed to be no adverse consequences, so the flights continued—until Challenger. In her 1996 book, The Challenger Launch Decision, sociologist Dr. Diane Vaughan analyzed why NASA permitted launches with defective O-rings to continue despite the likelihood that a disaster was ultimately inevitable.

Normalization of deviance, in her words, is “The gradual process through which unacceptable practices or standards become acceptable. As the deviant behavior is repeated without catastrophic results, it becomes the social norm for the organization.” The deviance spreads throughout the organization, infecting not just current employees, but new ones too: “We’ve always done it that way.”

Want some examples? Unstabilized approaches become the norm. The new vibration in your aircraft becomes the “new normal” until your mechanic discovers a sticky valve. The fact that Com 2 doesn’t have the range it used to becomes a harmless oddity until Com 1 takes a vacation.

NASA didn’t learn its lesson. Seventeen years later, Columbia came apart due to its damaged heat shield. You guessed it: shuttles returning with damaged heat shields had become the norm.

When pilots trade safety and accuracy for speed and efficiency, the slide down the slippery slope begins and continues until the shortcut becomes the norm. One day something goes badly wrong and we come to our senses, wondering how we ever thought that was safe.

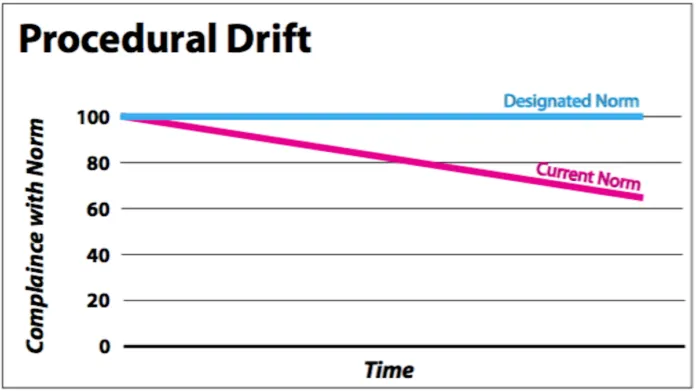

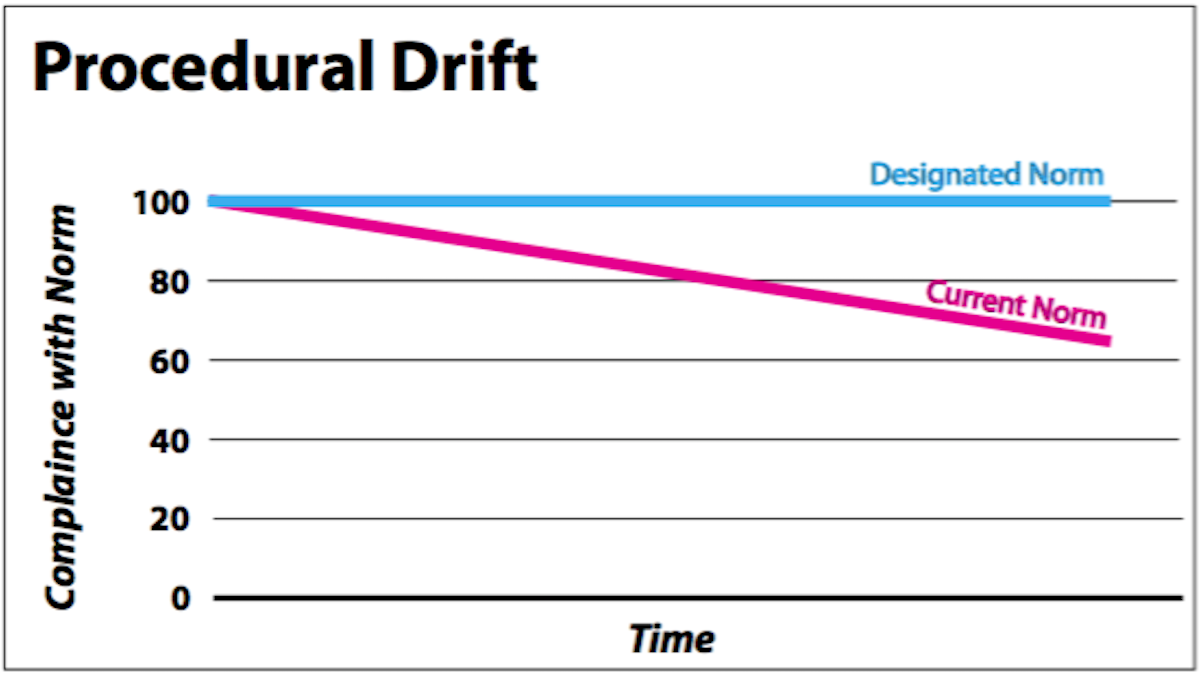

Procedural Drift

Given a set of written procedures as we have in the regs, the AIM, checklists, and the POH or even an SOP, there is a tendency to drift away from those procedures over time. Hence the term “procedural drift.” Performing a checklist from memory is but one example. What’s the point of a checklist if it doesn’t get used? The question to ask yourself is, “How far do I let myself drift?”

Simply put, procedural drift is the difference between “work as imagined” and “work as actually done” according to Dr. Stian Antonsen, a noted safety-culture researcher.

Let’s suppose that you acquire a new aircraft. It includes training at one of the major training centers. The profiles you fly, the standard operating procedures, and the system knowledge you acquire establish a baseline for performance. Yet over time, actual performance begins to drift from baseline.

Why is this so? The International Civil Aviation Organization (ICAO) suggests that the procedures learned cannot always be executed in real-life flying. Another reason is that procedures created might look good on paper and work in principle but not in practice. People being people, they will diverge from baseline to the extent needed to achieve the desired outcome.

ICAO realistically admits that all organizations operate with a degree of drift. Sometimes the drift strays from baseline and then varies around a point close to ideal. Worst-case, deviations can begin slowly, almost invisibly, then accelerate toward the line between safe and unsafe operation. Such was the case in the crash of N121JM.

Top Reasons for Procedural Drift (S. Dekker)

– Procedures are inaccurate or outdated

– Too time consuming—if followed, couldn’t get done in time

– People prefer to rely on their own skills and experience

– People assume they know what’s in the procedure

– Rules or procedures are over-designed and don’t match the way work is really done

– Past success (in deviating from the norm) is taken as an assurance of safety and becomes self-reinforcing

– Departures from the routine become routine and violations become compliant behavior

If They’d Only Run the Checklist

On the evening of May 31, 2014, N121JM, a Gulfstream Aerospace Corporation G-IV, crashed after it overran the end of Runway 11 during a rejected takeoff at KBED in Bedford, Massachusetts. All seven occupants perished.

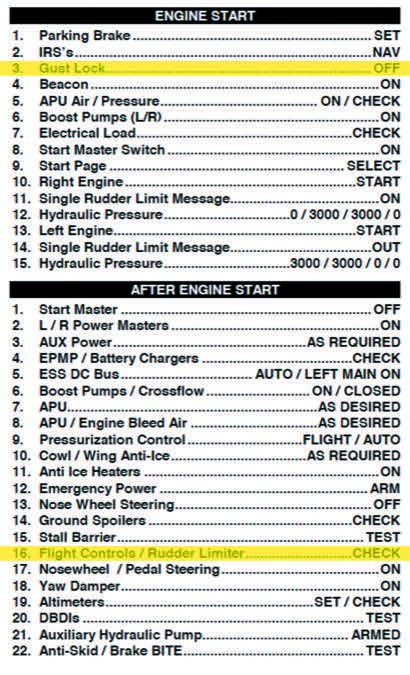

The NTSB determined the probable cause to be (in part), “the flight crewmembers’ failure to perform the flight control check before takeoff, their attempt to take off with the gust lock system engaged, and their delayed execution of a rejected takeoff after they became aware that the controls were locked. Contributing to the accident was the flight crew’s habitual noncompliance with checklists.”

This accident is explained by procedural drift. The crew was experienced and had flown together for about 12 years. Yet they neglected to release the gust lock. Running the Before Starting Engines checklist would have caught this mistake, but they didn’t run it.

Further, they did not call for checklists or even verbalize checklist items. Executing checklists silently and from memory bypasses many safety benefits. Their operations manual required flight crews to complete all appropriate checklists, but did not specify the preferred method of doing so.

The G-IV’s After Starting Engines checklist requires a flight control check. But no check was performed before the accident takeoff. All told, the crew had three chances to catch the error, but they missed all of them. Incredibly, the NTSB found that no complete check had been performed before 98 percent of the crew’s 175 previous takeoffs.

We know that procedural noncompliance can increase the likelihood of subsequent errors. Among the many reasons for noncompliance, explanations have included personality, workload and goal conflicts. There was no pressure on this crew. The NTSB noted that the consistency of the crew’s noncompliance suggested that the two pilots had developed shared attitudes in this regard. In this case, did familiarity breed contempt?

One insidious aspect of procedural drift is that when checks almost always reveal no issues, they may come to be regarded as less important, even meaningless. The fact that this crew was consistently paired may have increased risk, because another pilot would likely not have tolerated such behavior.

At the NTSB’s request, the NBAA reviewed 144,000 business-aircraft flights conducted from 2011 through 2015. The review showed that only partial control checks were performed before 16 percent of the takeoffs and that no control checks at all were performed before two percent.

What do we learn from this? We learn that even capable, highly experienced pilots can devolve into corner-cutting complacency. As one commenter noted, an expert can believe that his/her knowledge offers license to deviate.

If we want to fly as the real professionals do, we need to be humble and respect the medium in which we fly. In the words of Wilbur Wright, “In flying I have learned that carelessness and overconfidence are usually far more dangerous than deliberately accepted risks.” Those are good words to live by.

The Bottom Line

The FAA knows all about procedural drift. It’s why the agency is such a stickler for adherence to regulations. At one time, pilots routinely flew through a local Class D airspace without contacting the tower. While the tower was guilty of casual noncompliance, the practice became so rampant that the FAA cracked down and started violating errant pilots.

Before departure, is your weight and balance okay? Do you ever take off “just ten pounds” overweight? Do you skip calculating takeoff performance because you’ve safely done it many times before? When things get busy or you’re in a hurry, do you skip reading the checklist? Are you unconsciously drifting toward the edge of safety? Think about it.

Fred Simonds, CFII has learned that undisciplined behavior will almost certainly bite back down the road. See his web page at www.fredonflying.com.