It’s time that we stop and look at how far we’ve come with computer forecast models. They’ve made a huge impact on aviation forecasting. If you just take the single-engine up for an hour on the weekend, you probably don’t have much need for the weather models, but if you do any sort of regular cross-country flying, chances are you’ve run across at least some of them.

Model data isn’t just for meteorologists, dispatchers, and weather briefers. There’s a vast ocean of products out there that are ready to use and can help you get the upper hand on the weather. Models have also achieved a high degree of skill at small scales. Many of our WX SMARTS articles over the years have helped explain the basics of how to read the maps and compare them against TAFs and actual weather. In this issue we’ll explain where all these weather charts come from in the first place, and review the many changes that have happened over the past decade.

What Are Models?

Forecast models are more formally known as numerical weather prediction (NWP) models. There are two main types: dynamical models, in which the equations of motion are solved directly, and statistical models, which use mathematical or logical relationships derived from past events to produce the forecast. Rules of thumb and analogs are the simplest examples of statistical models, but the vast majority of predictions we all encounter are made with dynamical models.

The idea behind numerical models dates to the early 20th century. Norwegian meteorologist Vilhelm Bjerknes theorized in 1904 that physics had advanced far enough for the weather to be forecast by solving mathematical equations. Mathematician Lewis Fry Richardson actually tried to solve a test case, publishing the results in 1922. Working with a 10-inch slide rule, he needed over a month to project the weather six hours into the future. The process was painfully slow, and Richardson encountered problems in the model that weren’t yet understood, so the idea was shelved.

When instrument flying expanded in the 1930s, the U.S. Weather Bureau was still focused mostly on agriculture and shipping. Airlines such as Delta, American, and United created their own meteorology departments and hired forecasters who were skilled at working with the charts subjectively, using a vast amount of experience and intuition to predict where the fronts and weather systems would end up. This was not always successful, and books like Ernest Gann’s “Fate Is The Hunter” regale readers with stories about early propliners encountering unexpected icing, surprise storms, and airports mysteriously socked in. These events continued to characterize aviation weather during the 1950s and early 1960s. The forecasts were getting better and the government worked to connect pilots with forecasters, but weather accidents were still a big problem.

Meanwhile, the first electronic computers had been developed during WWII, in part to design nuclear weapons. Meteorologists at the Institute for Advanced Study in Princeton, New Jersey, arranged to use the Army’s ENIAC computer. They ran the first primitive numerical model in 1950; it was a success and validated the idea of numerical forecasting. Weather Bureau chief Francis Reichelderfer commented, “What we saw convinced us that a new era is opening in weather forecasting, an era which will be dependent on the use of high-speed automatic computers.” However, it soon became obvious that the system generated a lot of poor forecasts. These early models overpredicted cyclone development and were barely usable, and many problems remained to be solved.

During the 1950s these experimental models were refined and upgraded. Several large universities and national institutes across the United States collaborated on the problem. Meanwhile, the federal government set up the National Meteorological Center (NMC), the predecessor of NCEP, the National Centers for Environmental Prediction. The first operational forecast charts began flowing from NMC in the early 1960s.

The first “modern” forecast model dedicated to the United States was the LFM (Limited-area Fine Mesh) model, which was introduced in 1971 and would be run until the 1990s. A sophisticated global model called the GSM (Global Spectral Model) was introduced in 1980.

Through the 1980s and 1990s, growing computer power brought increasingly fine scales, and more sophisticated physics schemes accounted for more of the processes taking place in the atmosphere. Older models were updated while newer ones were developed.

Running a Model

Unfortunately, numerical modeling is not as simple as piping the observations into the computer and clicking the “start” button. There are nowhere near enough observations to get rid of statistical noise in the model fields, and vast areas of the ocean have no data at all. This unbalances the equations at many of the gridpoints, causing the model to quickly “blow up” and create false artifacts and nonexistent weather systems all over the charts.

As far back as the 1950s, modelers have addressed this by introducing a first guess field. The model is run on a regular schedule, hourly to daily, and a short-range forecast from the previous run is used to estimate the current state of the atmosphere. This first guess field is then corrected with actual observations using a wide variety of statistical schemes, a process known as data assimilation. In this sense, a weather center is continuously correcting its models.
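The idea of correcting a first guess with observations can be sketched for individual gridpoints. This is a minimal Python sketch, not any weather center’s actual scheme: it assumes Gaussian errors with known variances (`var_b` for the first guess, `var_o` for the observation) and uses the scalar optimal-interpolation weight, a simple ancestor of the variational and ensemble methods used operationally. The function names and temperatures are hypothetical.

```python
from __future__ import annotations


def analyze(first_guess: float, obs: float, var_b: float, var_o: float) -> float:
    """Correct a first-guess value toward an observation.

    The weight k = var_b / (var_b + var_o) is the scalar optimal-interpolation
    gain: the less we trust the first guess (large var_b) relative to the
    observation, the closer the analysis moves to the observation.
    """
    k = var_b / (var_b + var_o)
    return first_guess + k * (obs - first_guess)


def analyze_grid(first_guess: list[float], obs: dict[int, float],
                 var_b: float, var_o: float) -> list[float]:
    """Apply the correction at gridpoints that have an observation.

    obs maps a gridpoint index to its observed value. Points with no
    observation (for example, over open ocean) keep the first guess.
    Real schemes also spread each correction to nearby points; this
    sketch does not.
    """
    return [analyze(fg, obs[i], var_b, var_o) if i in obs else fg
            for i, fg in enumerate(first_guess)]


# Hypothetical temperatures (deg C): first guess from the previous run,
# with a single observation at gridpoint 1.
first_guess = [14.0, 15.0, 16.0]
print(analyze_grid(first_guess, {1: 17.0}, var_b=1.0, var_o=1.0))
```

With equal error variances the analysis lands halfway between the first guess and the observation, so gridpoint 1 moves from 15.0 to 16.0 while the unobserved points keep their first-guess values.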

Once we have a properly balanced starting point, we can say that it is initialized. At that point, a segment of computer code known as the solver runs computations to predict the weather several minutes in the future, using time steps. With each time step, the equations are solved once again. This process, known as integration, repeats hundreds of times. Gradually the model advances hour by hour into the forecast future, generating gigabytes of output that can be used to construct forecast maps. Integration ends when the solver arrives at a predetermined end time, ranging from hours to weeks in the future. The complete series of forecasts is known as a model run.
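The solver’s time-stepping loop can be illustrated with a toy problem. The Python sketch below is a heavily simplified stand-in for a real dynamical core, under stated assumptions: it moves a single quantity along a one-dimensional ring of gridpoints with a constant wind, using a first-order upwind scheme, and repeats the step until a predetermined end time. The function names are hypothetical. The stability check reflects the CFL condition, which is one reason finer grids need shorter time steps.

```python
from __future__ import annotations


def upwind_step(field: list[float], u: float, dx: float, dt: float) -> list[float]:
    """Advance a 1-D field one time step with the first-order upwind scheme.

    Assumes a constant wind u > 0 (m/s), grid spacing dx (m), time step dt (s),
    and a periodic domain: for i == 0, field[i - 1] wraps to the last point.
    """
    c = u * dt / dx  # Courant number
    if not 0.0 < c <= 1.0:
        raise ValueError(f"Courant number {c:.2f} violates the CFL condition")
    return [field[i] - c * (field[i] - field[i - 1]) for i in range(len(field))]


def integrate(field: list[float], u: float, dx: float, dt: float,
              end_time: float) -> list[float]:
    """Repeat time steps until the predetermined end time (s) is reached."""
    n_steps = round(end_time / dt)
    for _ in range(n_steps):
        field = upwind_step(field, u, dx, dt)
    return field


# A blob of moisture on a four-point ring, advected for one step.
print(integrate([0.0, 1.0, 0.0, 0.0], u=10.0, dx=10.0, dt=1.0, end_time=1.0))
```

Because the Courant number must stay at or below 1, a 50 m/s jet on a 12 km grid limits this scheme to time steps of 240 s (12,000 m divided by 50 m/s), while a 3 km grid would need 60 s or less.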

Running a model also takes more than solving the equations of motion. All models use parameterizations, which we simply call “physics.” These components estimate processes such as radiation, convection, cloud microphysics, and the interaction of weather and water with orography, vegetation, and land surfaces. Most of these processes are too complex, or occur on scales too small for the grid, to be modeled directly, so the physics packages feed the model sophisticated estimates at each gridpoint.
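To make “physics” more concrete, here is a minimal sketch of one of the simplest microphysics parameterizations, a Kessler-type warm-rain autoconversion term. It estimates how fast cloud water at a gridpoint turns into rain using only the grid-scale cloud water amount, standing in for droplet collisions the model cannot resolve. The rate constant, threshold, and function names are illustrative assumptions, not the settings of any particular operational model.

```python
from __future__ import annotations


def autoconversion_rate(qc: float, k1: float = 1.0e-3,
                        qc_threshold: float = 1.0e-3) -> float:
    """Rain production rate (kg/kg per second) from cloud water mixing ratio qc (kg/kg).

    Kessler-type form: no rain forms until cloud water exceeds a threshold,
    then production grows linearly with the excess. k1 (1/s) and
    qc_threshold (kg/kg) are illustrative values.
    """
    return k1 * max(qc - qc_threshold, 0.0)


def apply_autoconversion(qc: float, qr: float, dt: float) -> tuple[float, float]:
    """Move cloud water (qc) into rain water (qr) over one time step dt (s)."""
    transfer = min(autoconversion_rate(qc) * dt, qc)  # never remove more cloud than exists
    return qc - transfer, qr + transfer


# A gridpoint holding 2 g/kg of cloud water and no rain, over a 60 s step.
print(apply_autoconversion(2.0e-3, 0.0, 60.0))
```

Total water is conserved; the scheme only decides how much of it is rain. Operational packages use far more elaborate schemes, but they play the same role of turning grid-scale variables into estimates of unresolved processes.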

You don’t need to know everything under the hood, but one important measurement to know is horizontal resolution. With gridpoint models, resolution is the average spacing in kilometers between the gridpoints that make up the calculated data. The old ENIAC model from 1950 worked at a resolution of 736 km. By 1993 the Eta model was delivering 80 km resolution, while global models worked at 105 km. Today’s global models run at 12 km resolution, with the detailed HRRR model offering 3 km.
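A back-of-the-envelope calculation shows why finer resolution is so expensive. The sketch assumes an Earth surface area of about 510 million square kilometers and uniform gridpoint spacing; it counts points on a single level and ignores vertical levels and physics cost. The function names are hypothetical.

```python
EARTH_SURFACE_KM2 = 510.1e6  # approximate surface area of the Earth


def columns_per_level(spacing_km: float) -> float:
    """Approximate number of gridpoints on one level of a global grid."""
    return EARTH_SURFACE_KM2 / spacing_km ** 2


def relative_cost(coarse_km: float, fine_km: float) -> float:
    """Rough cost ratio of refining a grid from coarse_km to fine_km.

    Gridpoints scale as 1/dx**2, and the CFL condition forces the time step
    to shrink in proportion to dx, so cost grows roughly as (coarse/fine)**3.
    """
    return (coarse_km / fine_km) ** 3


for km in (736, 105, 12):
    print(f"{km:>4} km: {columns_per_level(km):>12,.0f} points per level")
print(f"105 km -> 12 km costs about {relative_cost(105, 12):.0f}x more")
```

Under these assumptions, a single level needs only about 940 points at the ENIAC model’s 736 km spacing but roughly 3.5 million at 12 km, and refining from 105 km to 12 km multiplies the work by roughly 670.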

Ensemble Forecasting

Like it or not, you’re going to hear a lot more about ensemble forecasting in the 2020s. A single conventional model run is known as a deterministic prediction. During the 1970s and 1980s, a single model run took hours. Computer power has since increased exponentially, and a sophisticated model now runs in mere minutes. So what will your supercomputer do with all that idle time?

Some bright researchers at the universities and weather centers realized that this extra time could be used to re-run the model multiple times, nudging the initial fields in some way (perturbations) or changing out various physics packages. Each of these adjustments produces a somewhat different forecast run. By running a large number of these modified initializations, we get an ensemble forecast made up of numerous ensemble members.
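The effect of nudging the initial fields can be demonstrated with a toy chaotic system. The sketch below assumes Lorenz’s classic 1963 three-variable model (standard parameters sigma = 10, rho = 28, beta = 8/3) as a stand-in for the atmosphere; it is a textbook illustration of chaos, not a weather model. Each member starts from the same control state plus tiny random perturbations, and the spread between members is measured as the forecast runs longer. Function names and settings are hypothetical.

```python
import random
import statistics


def tendency(s, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivatives of the Lorenz (1963) system at state s = (x, y, z)."""
    x, y, z = s
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(s, dt):
    """Advance the state one time step with fourth-order Runge-Kutta."""
    k1 = tendency(s)
    k2 = tendency(tuple(a + 0.5 * dt * b for a, b in zip(s, k1)))
    k3 = tendency(tuple(a + 0.5 * dt * b for a, b in zip(s, k2)))
    k4 = tendency(tuple(a + dt * b for a, b in zip(s, k3)))
    return tuple(a + dt / 6.0 * (b1 + 2.0 * b2 + 2.0 * b3 + b4)
                 for a, b1, b2, b3, b4 in zip(s, k1, k2, k3, k4))


def run(s, dt, n_steps):
    """Integrate one deterministic forecast."""
    for _ in range(n_steps):
        s = rk4_step(s, dt)
    return s


def ensemble(control, n_members, amplitude, dt, n_steps, seed=0):
    """Run n_members forecasts, each from a slightly perturbed copy of the control state."""
    rng = random.Random(seed)
    members = []
    for _ in range(n_members):
        perturbed = tuple(v + rng.gauss(0.0, amplitude) for v in control)
        members.append(run(perturbed, dt, n_steps))
    return members


def spread(members):
    """Ensemble spread: population standard deviation of x across members."""
    return statistics.pstdev([m[0] for m in members])


control = run((1.0, 1.0, 1.0), 0.01, 1000)  # spin up onto the attractor
for n_steps in (100, 500, 2000):
    members = ensemble(control, 20, 1e-3, 0.01, n_steps)
    print(f"t = {n_steps * 0.01:5.1f}: spread = {spread(members):.4f}")
```

Early on, the members stay close together and the spread is tiny; by the longest lead time the same thousandth-sized nudges have grown until the members disagree completely, which is exactly the uncertainty an ensemble is designed to expose.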

What do we do with ensembles? Good question. Even forecasters are still learning how best to use them. Ensembles are great for showing uncertainty in the output fields. When a feature like an upper-level low appears five days in the future with the same location and intensity in all the ensemble members, we have high confidence in the model prediction in that area of the map.

Rather than comparing models side by side, we can use spaghetti plots. On this type of chart, one or two key contour lines, such as a single height contour on the 500-mb chart, are drawn from every ensemble member on the same map. When the spaghetti lines are chaotic and drawn everywhere, our confidence is low. When they overlap tightly, our confidence is high. The plot also shows the range of forecast possibilities.
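One way to put a number on what a spaghetti plot shows is to find where each member’s chosen contour crosses a fixed line on the map and compare those positions. The sketch below assumes each member supplies 500-mb heights (meters) at a column of gridpoints running south to north; the 5640 m contour, the latitudes, the member values, and the function names are all hypothetical.

```python
def contour_crossing(lats, heights, level):
    """Latitude where heights cross `level`, by linear interpolation between gridpoints.

    Returns None if this member's contour does not cross the column.
    """
    for i in range(len(lats) - 1):
        h0, h1 = heights[i], heights[i + 1]
        if h0 != h1 and (h0 - level) * (h1 - level) <= 0:
            frac = (level - h0) / (h1 - h0)
            return lats[i] + frac * (lats[i + 1] - lats[i])
    return None


def crossing_spread(lats, members, level):
    """Range (degrees latitude) of the contour position across all members."""
    positions = [contour_crossing(lats, m, level) for m in members]
    positions = [p for p in positions if p is not None]
    if not positions:
        raise ValueError("no member's contour crosses this column")
    return max(positions) - min(positions)


lats = [30.0, 35.0, 40.0, 45.0]
members = [
    [5800, 5700, 5600, 5500],
    [5790, 5690, 5600, 5510],
    [5900, 5800, 5700, 5600],
]
print(f"5640 m contour spread: {crossing_spread(lats, members, 5640):.1f} deg")
```

A small range corresponds to tightly overlapping spaghetti; a range of several degrees means the lines are scattered and confidence in the position of that feature is low.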

We can also average all the ensemble members into an ensemble mean, but it isn’t used often because important details get smoothed out. Probabilities, medians, and other statistics can be derived as well.
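The difference between an ensemble mean and a probability is easy to see at a single point. This minimal sketch assumes five hypothetical member forecasts of ceiling at one airport and uses the 1,000 ft IFR ceiling as the threshold; the function name is hypothetical.

```python
import statistics


def ensemble_summary(values, threshold):
    """Mean, median, and probability of falling below `threshold` across members."""
    return {
        "mean": statistics.fmean(values),
        "median": statistics.median(values),
        "prob_below": sum(v < threshold for v in values) / len(values),
    }


# Hypothetical ceiling forecasts (feet AGL) from five ensemble members.
ceilings = [500, 500, 3000, 3000, 3000]
print(ensemble_summary(ceilings, threshold=1000))
```

Here the mean ceiling of 2,000 ft is a value no member actually forecasts, while the 40 percent probability of a ceiling below 1,000 ft keeps the IFR possibility in plain view. That is the smoothing problem with ensemble means in a nutshell.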

Today

As of 2019, the National Centers for Environmental Prediction (NCEP, formerly NMC) runs a large number of numerical models. Since 2016 its supercomputers have consisted of Cray XC40 and Dell PowerEdge systems with 14,000 terabytes of disk space and 5,000 processors each. These computers are located in data centers in Reston, Virginia, and Orlando, Florida.

The main workhorse is the Global Forecast System (GFS). This model runs four times a day covering the entire world. For decades, a large number of data products and aviation weather products have been built with it every day, in particular the standard ICAO winds aloft charts. If you’ve ever taken an airline flight across the Atlantic or Pacific, it’s likely that your jet’s fuel consumption, routing, and altitudes were based heavily on GFS output.

The GFS grew out of the old Global Spectral Model (GSM) that was introduced in 1980. Old-timers may remember the AVN (aviation model), a short-fuse GSM run for the aviation community that was folded into the GFS in 2002. The GFS underwent a major upgrade in June 2019, when the spectral GSM core was removed and replaced with the FV3 core, changing the global model from a spectral to a gridpoint model and adding new physics. It forecasts out to 384 hours in the future, though anything past 180 hours is questionable.
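Model charts are labeled by cycle (the run’s start time in UTC) and forecast hour, and the valid time of each panel is simply the two added together. The sketch assumes the GFS cycles at 00, 06, 12, and 18 UTC and an hourly cycle for rapid-update models, and it ignores the delay before each run’s output is actually posted. The function names and the example date are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def latest_cycle(now: datetime, interval_hours: int) -> datetime:
    """Most recent model cycle at or before `now` (UTC).

    Assumes cycles start at 00 UTC and repeat every interval_hours
    (6 for the GFS, 1 for an hourly model). Ignores publication delay.
    """
    hour = (now.hour // interval_hours) * interval_hours
    return now.replace(hour=hour, minute=0, second=0, microsecond=0)


def valid_time(cycle: datetime, forecast_hour: int) -> datetime:
    """Time a forecast panel is valid for: cycle time plus the forecast hour."""
    return cycle + timedelta(hours=forecast_hour)


now = datetime(2019, 7, 1, 14, 30, tzinfo=timezone.utc)
gfs_cycle = latest_cycle(now, 6)        # the 12 UTC run
print(valid_time(gfs_cycle, 384))       # 2019-07-17 12:00:00+00:00
```

The 384-hour panel of a 12 UTC run on July 1 is valid 16 days later, which is a useful reminder of how far past the trustworthy range those last panels reach.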

Another very popular model is the WRF, the Weather Research & Forecasting model. The WRF was first introduced in 2000, building on Penn State and UCAR’s MM5 model. It is not a global model but is designed for regional scales. What’s special about the WRF is that it’s highly portable and configurable: it’s designed to run on almost any computer, and its physics packages can be easily customized.

NCEP recognized the power of the WRF model early on. Looking to phase out the older NGM and Eta models, it adopted the WRF in 2006 and added its own core. This became known as the WRF-NMM (Nonhydrostatic Mesoscale Model), which was updated to NMM-B in 2012. It is part of the NAM (North American Mesoscale) model package that focuses on weather at the national and state scale.

The other WRF core, developed at NCAR, is known as the WRF-ARW (Advanced Research WRF), or simply the ARW. The ARW core is more powerful and has better forecast skill, while the NMM-B is simpler and computationally cheaper. Although the NAM doesn’t use the ARW, its forecasts can be viewed on other sites, and its good physics packages make it worth using.

As a pilot, one model you should be familiar with is the RAP (Rapid Refresh) model. This is similar to the old RUC (Rapid Update Cycle) model from the 1990s and 2000s. The RAP is a WRF-ARW model with physics packages built from the RUC. As its name suggests, the RAP is run once per hour, giving the latest look at forecast conditions. This rapid update scheme was designed especially to serve the needs of the aviation and severe weather communities.

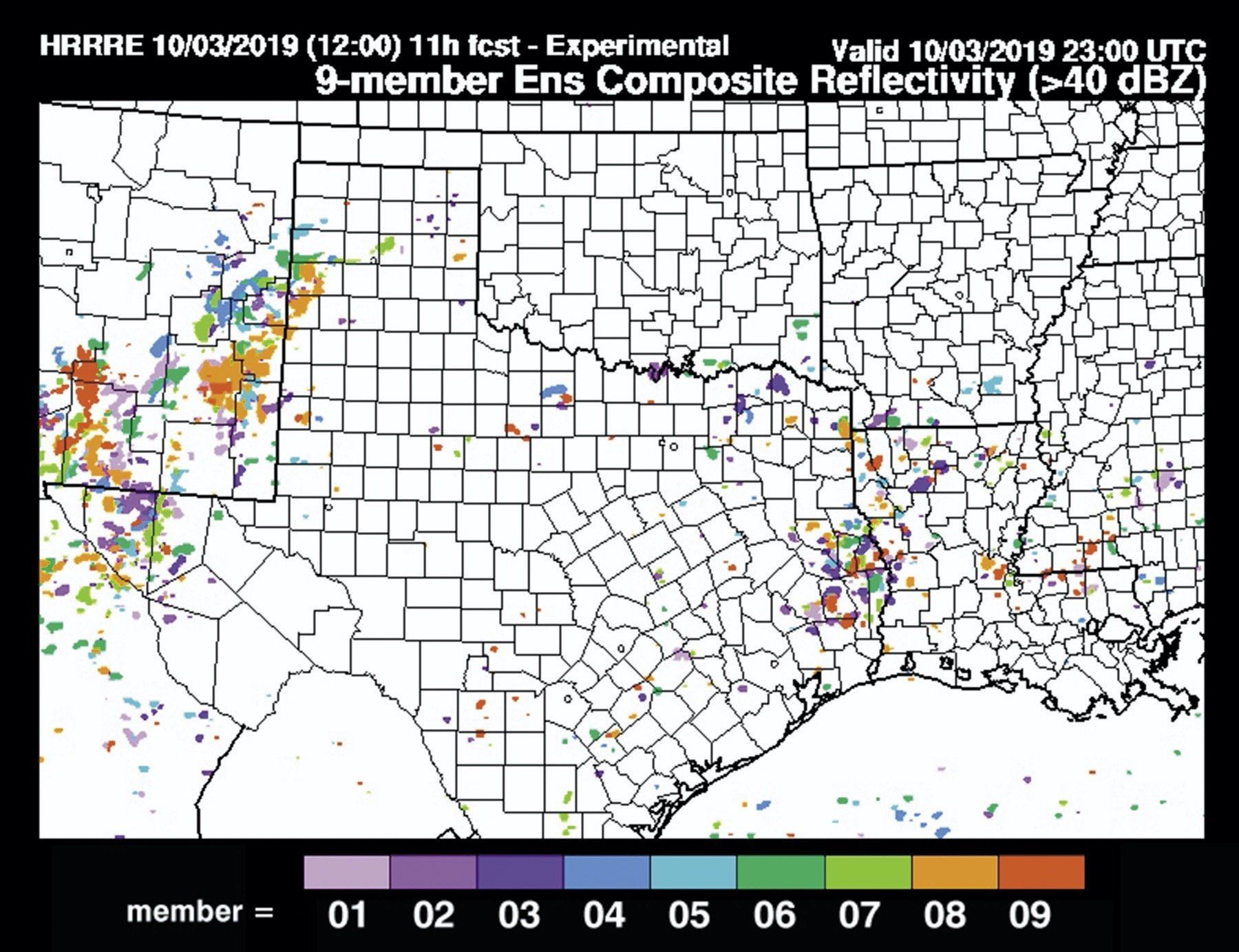

The RAP model also has a high-resolution subset called the HRRR (High Resolution Rapid Refresh). The HRRR uses gridpoints only 3 km apart, allowing it to model some of the processes in thunderstorm complexes, as well as modeling sea breezes and the intricate airflow in mountainous areas of the western United States and Appalachians.

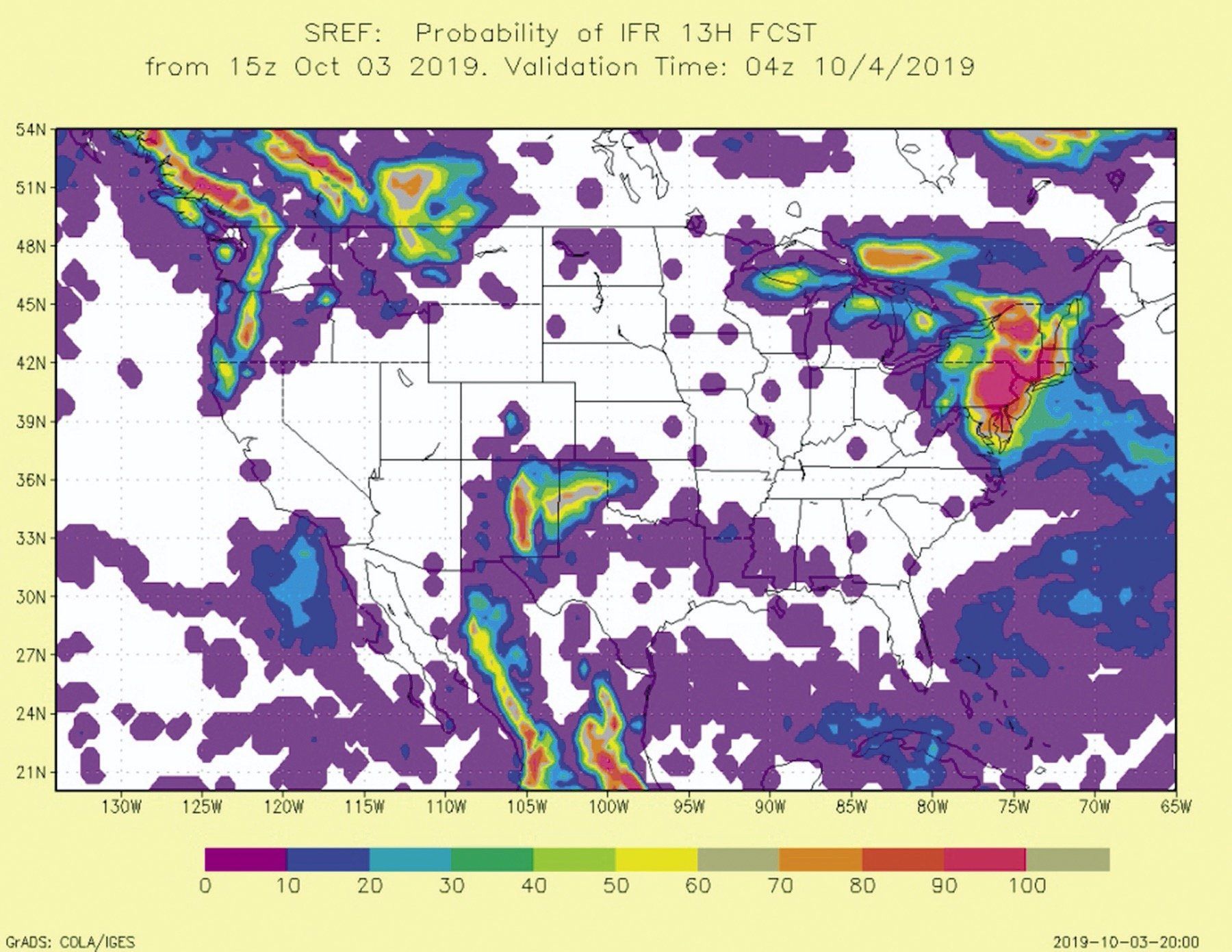

Most of today’s models are not just deterministic but also have ensembles, such as the GEFS for the GFS, the HRRRE for the HRRR, and the ENS for the European ECMWF HRES global model. There is also an ensemble system made up of multiple types of models called the SREF. It blends 27 members that use several WRF cores and physics packages to show a wide range of possible weather. Some websites, such as SPC and EMC, provide probabilities and spaghetti plots of aviation fields.

Viewing the Data

Models today offer far more than the traditional upper-level winds and pressure fields. Nowadays it’s routine for models to include simulated radar, aviation flight rule forecasts, ceiling, visibility, icing, and detailed precipitation. These fields are surprisingly accurate, if a bit noisy and inconsistent.

So which models should you look at? It’s actually pretty simple. The RAP and HRRR are by far the best models to use when planning a flight within 12 hours over the United States. For flights beyond that window, choose the NAM. If your flight is three or more days away or anywhere outside North America, choose the GFS. If you have an extra 15 minutes and the weather is especially important, dig into the ensembles.
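The rule of thumb in that paragraph amounts to a small decision table. As a sketch, it can be written down like this, with hypothetical function and parameter names, the 12-hour and three-day thresholds taken from the text, and the optional ensemble check left out.

```python
from __future__ import annotations


def suggested_models(hours_until_flight: float, over_us: bool,
                     in_north_america: bool) -> list[str]:
    """Which model guidance to check first, following the article's rule of thumb."""
    if hours_until_flight >= 72 or not in_north_america:
        return ["GFS"]           # three or more days out, or outside North America
    if hours_until_flight <= 12 and over_us:
        return ["RAP", "HRRR"]   # short range over the United States
    return ["NAM"]               # everything in between


print(suggested_models(6, over_us=True, in_north_america=True))
print(suggested_models(30, over_us=True, in_north_america=True))
```

Writing it out this way makes the order of the checks explicit: distance in time and location outside North America decide first, and only then does the short-range case get the rapid-update models.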

Some sources of data are provided in the table. With all of the sites, look for domain selection dropdowns. These are important for allowing you to “zoom” to your area of interest and see more detail. For example, if you’re in Illinois and browsing the HRRR site, you’ll want to click the “NC” domain.

You should now have a better idea of how the various model forecasts work. They’re exciting tools and I hope you discover ways that they can help supplement your trip planning and enhance your weather briefings. We will only see more and more model data in the years ahead, so now’s the time to dig in and learn about what’s available.

Tim Vasquez is a former Air Force meteorologist who remembers wondering in 1997 whether models were reaching their limits. He’s had a change of heart and feels we are living in exciting times.

Resources

HRRRE: rapidrefresh.noaa.gov/hrrr/HRRRE

HRRR and RAP

International models: www.eurowx.com ($)

All NCEP models: pivotalweather.com (best); mag.ncep.noaa.gov (official)

NSSL WRF-ARW

SREF
